Node v1.3.2 stopped working on its own. It took me a while to notice because network traffic continued to look normal. I restarted the node service first and only later realized that the restart wiped /opt/xxnetwork/node-logs/node-err.log (assuming the file was actually written, as the log below suggests).

DEBUG 2020/07/21 10:51:41 Successfully contacted local address!

INFO 2020/07/21 10:51:41 Adding dummy users to registry

INFO 2020/07/21 10:51:41 Waiting on communication from gateway to continue

INFO 2020/07/21 10:51:42 Communication from gateway received

INFO 2020/07/21 10:51:42 Updating to WAITING

INFO 2020/07/21 10:51:44 Updating to ERROR

FATAL 2020/07/21 10:51:44 Error encountered - closing server & writing error to /opt/xxnetwork/node-logs/node-err.log: Received error polling for permisioning: Issue polling permissioning: rpc error: code = Unknown desc = Round has failed, state cannot be updated

gitlab.com/elixxir/comms/node.(*Comms).SendPoll.func1

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/node/register.go:62

gitlab.com/elixxir/comms/connect.(*Host).send

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/connect/host.go:206

gitlab.com/elixxir/comms/connect.(*ProtoComms).Send

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/connect/comms.go:285

gitlab.com/elixxir/comms/node.(*Comms).SendPoll

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/node/register.go:69

gitlab.com/elixxir/server/permissioning.PollPermissioning

/builds/elixxir/server/permissioning/permissioning.go:159

gitlab.com/elixxir/server/permissioning.Poll

/builds/elixxir/server/permissioning/permissioning.go:69

gitlab.com/elixxir/server/node.NotStarted.func1

/builds/elixxir/server/node/changeHandlers.go:192

runtime.goexit

/usr/lib/go-1.13/src/runtime/asm_amd64.s:1357

Failed to send

gitlab.com/elixxir/server/permissioning.PollPermissioning

/builds/elixxir/server/permissioning/permissioning.go:161

gitlab.com/elixxir/server/permissioning.Poll

/builds/elixxir/server/permissioning/permissioning.go:69

gitlab.com/elixxir/server/node.NotStarted.func1

/builds/elixxir/server/node/changeHandlers.go:192

runtime.goexit

/usr/lib/go-1.13/src/runtime/asm_amd64.s:1357

panic: Error encountered - closing server & writing error to /opt/xxnetwork/node-logs/node-err.log: Received error polling for permisioning: Issue polling permissioning: rpc error: code = Unknown desc = Round has failed, state cannot be updated

gitlab.com/elixxir/comms/node.(*Comms).SendPoll.func1

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/node/register.go:62

gitlab.com/elixxir/comms/connect.(*Host).send

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/connect/host.go:206

gitlab.com/elixxir/comms/connect.(*ProtoComms).Send

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/connect/comms.go:285

gitlab.com/elixxir/comms/node.(*Comms).SendPoll

/root/go/pkg/mod/gitlab.com/elixxir/[email protected]/node/register.go:69

gitlab.com/elixxir/server/permissioning.PollPermissioning

/builds/elixxir/server/permissioning/permissioning.go:159

gitlab.com/elixxir/server/permissioning.Poll

/builds/elixxir/server/permissioning/permissioning.go:69

gitlab.com/elixxir/server/node.NotStarted.func1

/builds/elixxir/server/node/changeHandlers.go:192

runtime.goexit

/usr/lib/go-1.13/src/runtime/asm_amd64.s:1357

Failed to send

gitlab.com/elixxir/server/permissioning.PollPermissioning

/builds/elixxir/server/permissioning/permissioning.go:161

gitlab.com/elixxir/server/permissioning.Poll

/builds/elixxir/server/permissioning/permissioning.go:69

gitlab.com/elixxir/server/node.NotStarted.func1

/builds/elixxir/server/node/changeHandlers.go:192

runtime.goexit

/usr/lib/go-1.13/src/runtime/asm_amd64.s:1357

goroutine 64 [running]:

log.(*Logger).Panic(0xc0002e8af0, 0xc000259738, 0x1, 0x1)

/usr/lib/go-1.13/src/log/log.go:212 +0xaa

gitlab.com/elixxir/server/internal.CreateServerInstance.func1(0xc0001eae00, 0x647)

/builds/elixxir/server/internal/instance.go:114 +0x7f

gitlab.com/elixxir/server/node.Error(0xc000094a80, 0xb38004, 0x5ec301)

/builds/elixxir/server/node/changeHandlers.go:419 +0x45c

gitlab.com/elixxir/server/cmd.StartServer.func8(0xc000000001, 0xc0002598a4, 0x1)

/builds/elixxir/server/cmd/node.go:122 +0x2d

gitlab.com/elixxir/server/internal/state.Machine.stateChange(0xc0002cfd18, 0xc0002d15c0, 0xc000255160, 0xda6750, 0xc000255170, 0xda6758, 0xc000255180, 0xda6760, 0xc000255190, 0xda6768, ...)

/builds/elixxir/server/internal/state/state.go:400 +0x7d

gitlab.com/elixxir/server/internal/state.Machine.Update(0xc0002cfd18, 0xc0002d15c0, 0xc000255160, 0xda6750, 0xc000255170, 0xda6758, 0xc000255180, 0xda6760, 0xc000255190, 0xda6768, ...)

/builds/elixxir/server/internal/state/state.go:204 +0x1ad

gitlab.com/elixxir/server/internal.(*Instance).reportFailure(0xc000094a80, 0xc000354b40)

/builds/elixxir/server/internal/instance.go:589 +0x3e1

gitlab.com/elixxir/server/internal.(*Instance).ReportRoundFailure(0xc000094a80, 0xe94440, 0xc0003e4300, 0xc0000399e0, 0x0)

/builds/elixxir/server/internal/instance.go:552 +0x257

gitlab.com/elixxir/server/internal.(*Instance).ReportNodeFailure(...)

/builds/elixxir/server/internal/instance.go:521

gitlab.com/elixxir/server/node.NotStarted.func1(0xc000094a80)

/builds/elixxir/server/node/changeHandlers.go:196 +0x4b7

created by gitlab.com/elixxir/server/node.NotStarted

/builds/elixxir/server/node/changeHandlers.go:164 +0x698

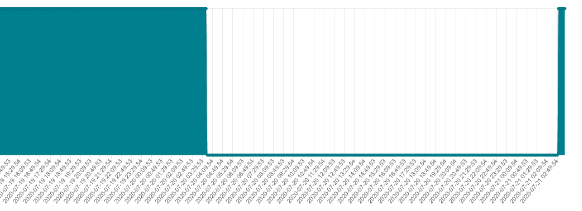

The wrapper log shows "Restarting binary due to error..." quite frequently:

[INFO] 21-Jul-20 10:51:34: Executing command: {'command': 'start', 'nodes': None}

[INFO] 21-Jul-20 10:51:34: /opt/xxnetwork/bin/xxnetwork-node started at PID 23072

[INFO] 21-Jul-20 10:51:34: Completed command: {'command': 'start', 'nodes': None}

[WARNING] 21-Jul-20 10:51:45: Restarting binary due to error...

[INFO] 21-Jul-20 10:51:45: /opt/xxnetwork/bin/xxnetwork-node started at PID 23116

[WARNING] 21-Jul-20 10:55:37: Restarting binary due to error...

[INFO] 21-Jul-20 10:55:37: /opt/xxnetwork/bin/xxnetwork-node started at PID 23348

[WARNING] 21-Jul-20 10:57:56: Restarting binary due to error...

[INFO] 21-Jul-20 10:57:56: /opt/xxnetwork/bin/xxnetwork-node started at PID 23515

[WARNING] 21-Jul-20 11:00:26: Restarting binary due to error...

[INFO] 21-Jul-20 11:00:26: /opt/xxnetwork/bin/xxnetwork-node started at PID 23678

[WARNING] 21-Jul-20 11:00:38: Restarting binary due to error...

[INFO] 21-Jul-20 11:00:38: /opt/xxnetwork/bin/xxnetwork-node started at PID 23721
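To put a number on "quite frequent", here is a rough Python sketch that pulls the restart timestamps out of the wrapper log and prints the gap between consecutive restarts. It assumes the lines look exactly like the excerpt above (`[WARNING] DD-Mon-YY HH:MM:SS: Restarting binary due to error...`) and that the month abbreviations are English. The function names `restart_times` and `restart_gaps` are made up for this example and are not part of any xx network tooling.

```python
import re
from datetime import datetime

# Matches wrapper-log lines such as:
#   [WARNING] 21-Jul-20 10:51:45: Restarting binary due to error...
# The format is inferred from the excerpt in this post (an assumption).
RESTART_RE = re.compile(
    r"^\[WARNING\] (\d{2}-[A-Za-z]{3}-\d{2} \d{2}:\d{2}:\d{2}): "
    r"Restarting binary due to error"
)


def restart_times(lines):
    """Return the timestamp of every 'Restarting binary' warning."""
    times = []
    for line in lines:
        match = RESTART_RE.match(line.strip())
        if match:
            # %b expects English month abbreviations in the default locale.
            times.append(datetime.strptime(match.group(1), "%d-%b-%y %H:%M:%S"))
    return times


def restart_gaps(times):
    """Return the seconds elapsed between consecutive restarts."""
    return [(later - earlier).total_seconds()
            for earlier, later in zip(times, times[1:])]


# The wrapper-log excerpt from this post, used as sample input.
SAMPLE = """\
[INFO] 21-Jul-20 10:51:34: /opt/xxnetwork/bin/xxnetwork-node started at PID 23072
[WARNING] 21-Jul-20 10:51:45: Restarting binary due to error...
[INFO] 21-Jul-20 10:51:45: /opt/xxnetwork/bin/xxnetwork-node started at PID 23116
[WARNING] 21-Jul-20 10:55:37: Restarting binary due to error...
[INFO] 21-Jul-20 10:55:37: /opt/xxnetwork/bin/xxnetwork-node started at PID 23348
[WARNING] 21-Jul-20 10:57:56: Restarting binary due to error...
[INFO] 21-Jul-20 10:57:56: /opt/xxnetwork/bin/xxnetwork-node started at PID 23515
[WARNING] 21-Jul-20 11:00:26: Restarting binary due to error...
[INFO] 21-Jul-20 11:00:26: /opt/xxnetwork/bin/xxnetwork-node started at PID 23678
[WARNING] 21-Jul-20 11:00:38: Restarting binary due to error...
[INFO] 21-Jul-20 11:00:38: /opt/xxnetwork/bin/xxnetwork-node started at PID 23721
"""

if __name__ == "__main__":
    times = restart_times(SAMPLE.splitlines())
    print(f"{len(times)} restarts")
    for gap in restart_gaps(times):
        print(f"{gap:.0f} s since previous restart")
```

To run it against the real wrapper log, pass the lines of the opened file to `restart_times` instead of `SAMPLE.splitlines()`.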

Any suggestions on how to debug this, or on what diagnostic info would be helpful to gather?